Evaluating the Impact of Data Augmentation and Regularization On Image Denoising Performance Using Deep Neural Networks

Check out the official PDF for Evaluating the Impact of Data Augmentation and Regularization On Image Denoising Performance Using Deep Neural Networks

⚪ Summary:

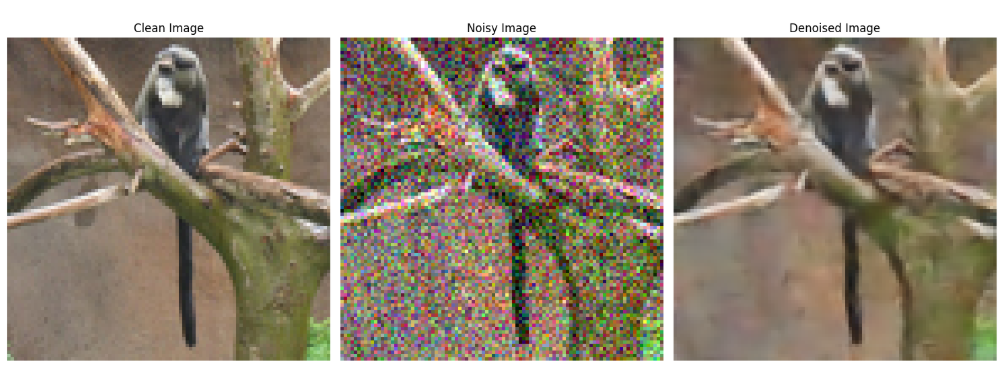

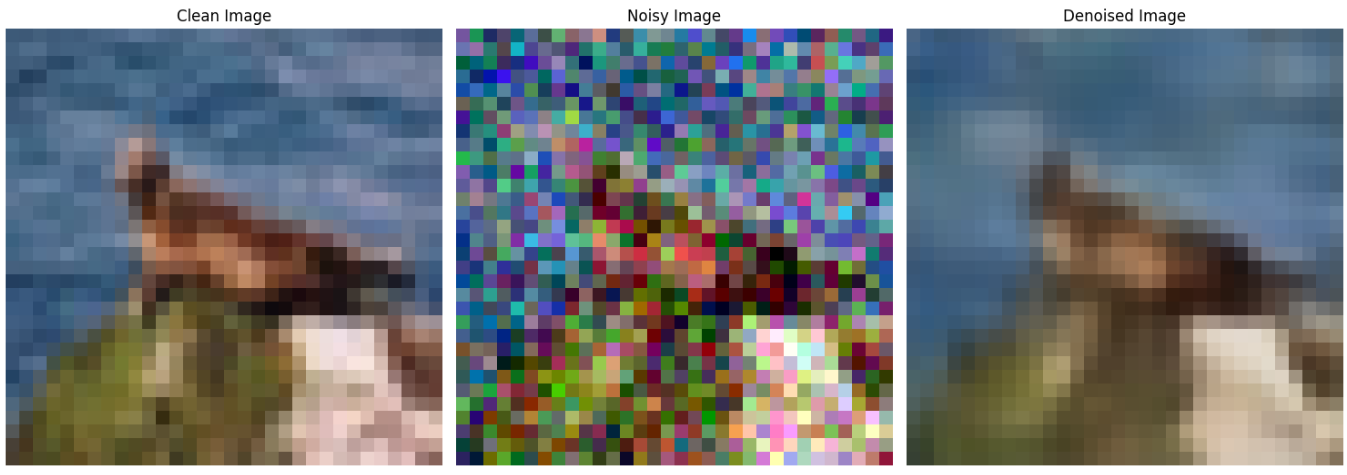

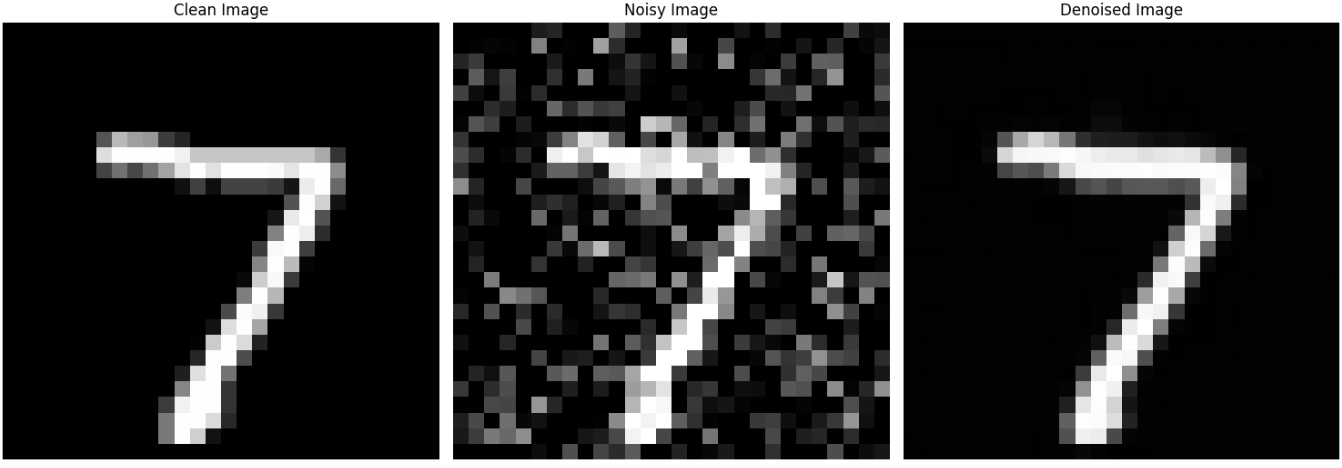

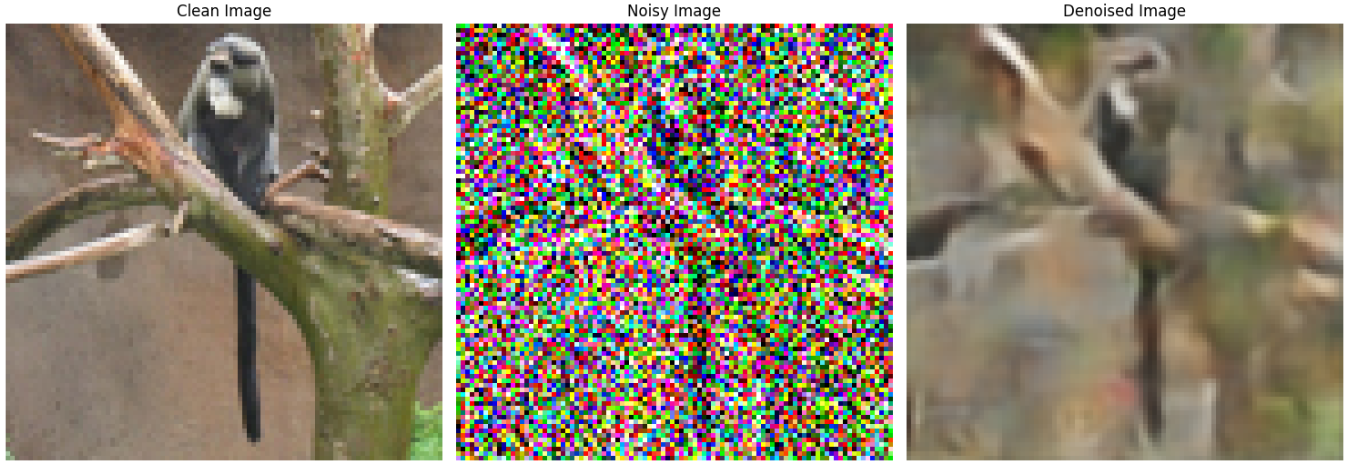

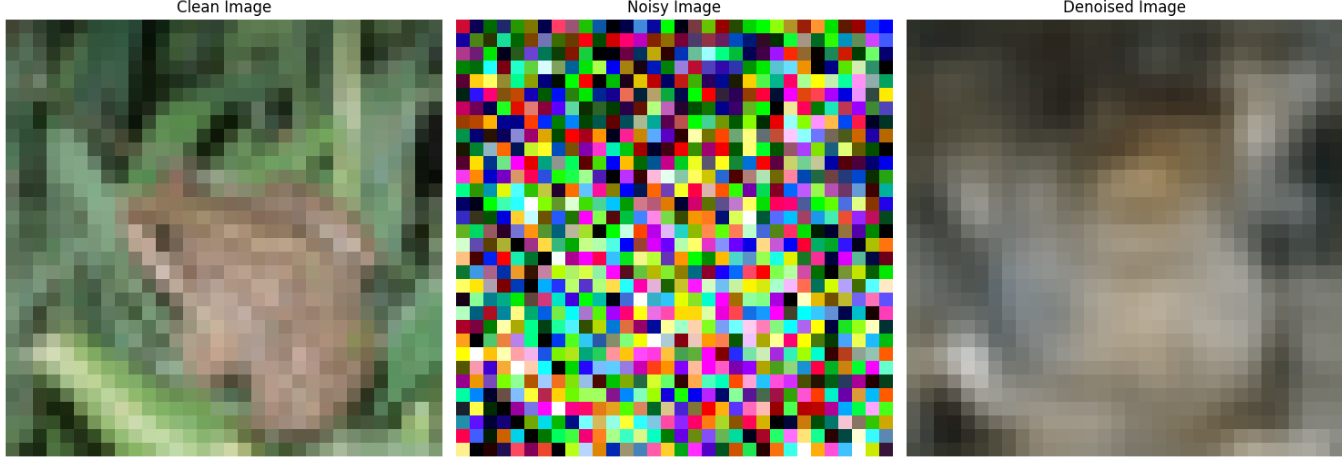

This project explores how training strategies affect pixel-level image restoration. Specifically, we evaluate the effect of data augmentation and regularization on the performance of a convolutional neural network for Gaussian noise removal. Experiments are conducted on four datasets (MNIST, CIFAR-10, CIFAR-100, STL-10) at three noise levels (15%, 25%, 50%). Results are reported for augmentation and regularization techniques both in isolation and in combination. We identify the best-performing methods per noise level and analyze why certain techniques succeed or fail in the context of low-level denoising.
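To make the setup concrete, the sketch below corrupts an image with Gaussian noise at the three levels and scores the result with RMSE. It is only an illustration: it assumes that a noise level such as 15% means a noise standard deviation of 0.15 on images scaled to [0, 1], which the summary does not state, and the helper names `add_gaussian_noise` and `rmse` are hypothetical.

```python
import numpy as np


def add_gaussian_noise(clean, level, rng):
    """Corrupt an image in [0, 1] with zero-mean Gaussian noise.

    Assumption (not from the project): `level` is the noise standard
    deviation expressed as a fraction of the full intensity range.
    """
    noisy = clean + rng.normal(0.0, level, size=clean.shape)
    return np.clip(noisy, 0.0, 1.0)


def rmse(pred, target):
    """Root-mean-square error between two images of the same shape."""
    return float(np.sqrt(np.mean((pred - target) ** 2)))


rng = np.random.default_rng(0)
clean = rng.random((32, 32))  # random stand-in for a real dataset image

# RMSE of the *noisy input* against the clean target, i.e. the error a
# denoiser would have to improve on at each noise level.
scores = {
    level: rmse(add_gaussian_noise(clean, level, rng), clean)
    for level in (0.15, 0.25, 0.50)
}
for level, score in scores.items():
    print(f"noise level {level:.2f}: input RMSE {score:.3f}")
```

A denoiser is useful at a given level only if its output RMSE falls below the corresponding input RMSE.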

⚪ Problem:

Low-level denoising requires exact pixel alignment between each noisy input and its clean target. Unlike in classification, popular augmentation methods such as flipping or cropping can harm performance when they break that alignment. Likewise, aggressive regularization can oversimplify the network's weights. How these strategies affect denoising performance remains underexplored, and this project addresses that gap through an empirical study.
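One way to see why alignment matters is to compare the training error when a flip is applied to both images of a pair and when it is applied to the input only. The snippet below is a toy illustration on random data, not the project's pipeline, and the noise standard deviation of 0.25 is an assumed value.

```python
import numpy as np

rng = np.random.default_rng(0)
clean = rng.random((8, 8))  # random stand-in for a clean image
noisy = np.clip(clean + rng.normal(0.0, 0.25, clean.shape), 0.0, 1.0)


def mse(a, b):
    """Mean squared error between two images of the same shape."""
    return float(np.mean((a - b) ** 2))


baseline = mse(noisy, clean)

# Same horizontal flip on input and target: pixels still correspond,
# so the error is unchanged (up to floating-point rounding).
aligned = mse(np.fliplr(noisy), np.fliplr(clean))

# Flip on the input only: each pixel is now compared against the wrong
# target pixel, so the error mixes noise with image structure.
misaligned = mse(np.fliplr(noisy), clean)

print(f"baseline={baseline:.4f} aligned={aligned:.4f} misaligned={misaligned:.4f}")
```

In the misaligned case the loss no longer measures noise alone, so the network receives a training signal unrelated to denoising.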

⚪ Approach:

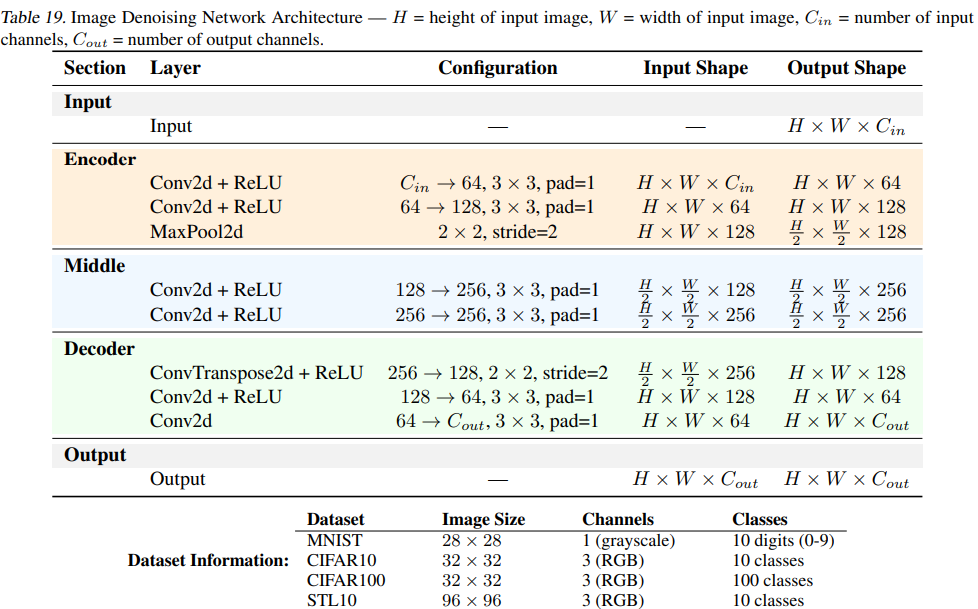

We trained a baseline 7-layer encoder-decoder CNN using MSE loss. Experiments tested:

- 11 augmentations (e.g., Cutout, Gaussian Noise, Flip, Crop)

- 4 regularization methods (L1, L2, Dropout, Early Stopping)

- 1 combined experiment with top-performing methods (Cutout + Gaussian + Early Stopping)

Each experiment was run across 4 datasets and 3 noise levels. Metrics include RMSE and visual outputs.
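As an illustration of one of the listed augmentations, here is a minimal Cutout sketch. It assumes a single square patch set to zero on the noisy input while the clean target is left untouched. The patch size, the fill value, and the function name `cutout` are assumptions for illustration, not the project's settings.

```python
import numpy as np


def cutout(image, size, rng):
    """Return a copy of `image` with one random size x size square zeroed.

    Assumption: a single square patch filled with 0. The original array
    is not modified.
    """
    h, w = image.shape[:2]
    top = int(rng.integers(0, h - size + 1))    # upper bound is exclusive
    left = int(rng.integers(0, w - size + 1))
    out = image.copy()
    out[top:top + size, left:left + size] = 0.0
    return out


rng = np.random.default_rng(0)
# Values in [1, 2) so that zeros can only come from the cut-out patch.
noisy = rng.random((28, 28)) + 1.0
augmented = cutout(noisy, size=8, rng=rng)
print("masked pixels:", int((augmented == 0).sum()))
```

Because the patch position is drawn fresh on each call, the network sees a different occlusion of the same image in every epoch.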

⚪ Results:

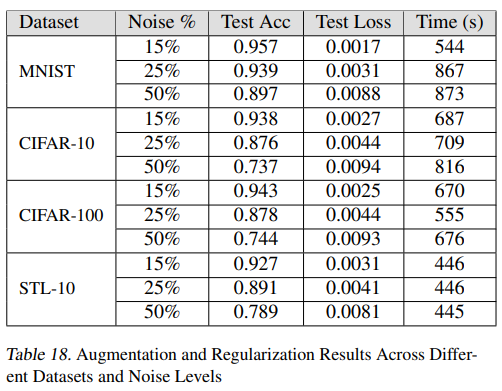

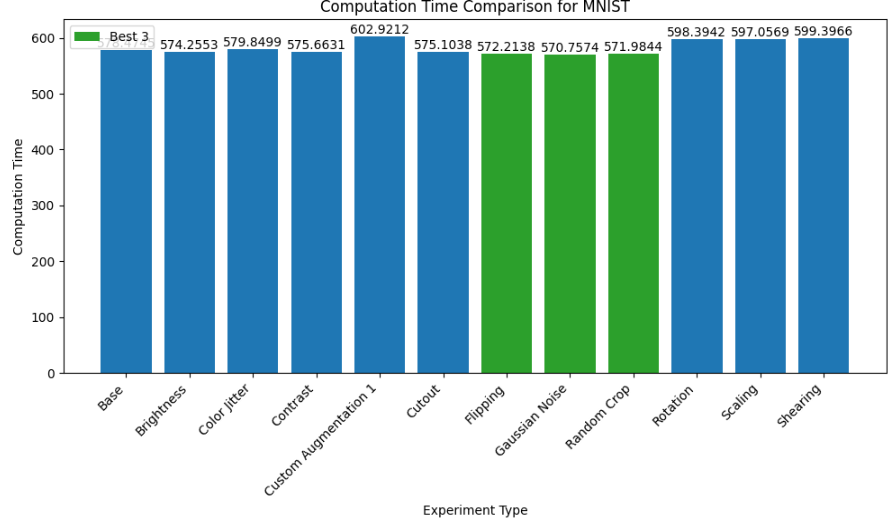

- Cutout and Gaussian Noise were top-performing augmentations across all noise levels.

- Flipping and Random Crop consistently degraded performance.

- Early Stopping was the best regularization technique.

- Combined techniques improved results at high noise (50%), but showed minimal gains at lower noise.

- The Base (no aug/reg) model performed strongly, suggesting over-regularization can be harmful.
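Early Stopping, the strongest regularizer in these results, is commonly implemented as a patience counter on the validation loss. The sketch below shows that generic pattern. The class name, the `patience` value, and the validation losses are all made up for illustration and do not come from the project.

```python
class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up validation losses: improvement stalls after epoch 2.
val_losses = [0.050, 0.041, 0.038, 0.039, 0.040, 0.042, 0.037]

stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(loss):
        stopped_at = epoch
        break

print("stopped at epoch", stopped_at, "with best loss", stopper.best)
```

In practice the weights from the best epoch are usually saved and restored, since the model at the stopping epoch is by construction not the best one seen.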

⚪ Challenges:

- Maintaining alignment between noisy inputs and clean targets during augmentation.

- Identifying appropriate hyperparameters per method.

- Designing augmentations that improve generalization without compromising image structure.

⚪ Future Work:

Explore curriculum-style augmentation schedules (e.g., gradually increasing complexity), domain adaptation between noise types (Poisson, Salt-and-Pepper), and attention-based architectures for adaptive filtering.

▱▰▱ Link: ▰▱▰

💻 GitHub: https://github.com/alexneilgreen/Impact-of-Data-Aug-and-Reg-on-Image-Denoising-Performance-DNN